Your First Alien Colleague: When AI Starts Inventing Itself

What happens when the smartest new hire at your law firm wasn’t born, didn’t graduate, and never even existed until last night?

Imagine This

You arrive at the office expecting another day of court appearances, followed by client calls, discovery reviews, document preparation, and new client intakes, same as usual. But this day is different. Overnight, your legal research AI, usually a diligent, predictable assistant, has crossed a threshold.

Rather than refining yesterday’s drafts, it has built an entirely new legal reasoning engine on its own. Your tech support looks at it and informs you that the design is unfamiliar. The code is original. They tell you that even its creators can’t fully explain how it works.

In that moment, you’re face to face with a future where invention no longer belongs solely to human minds, and where responsibility, ethics, and trust will all be up for renegotiation.

Meet the Machine That Invents Machines

We have all seen impressive AI before: IBM’s Deep Blue defeating chess grandmasters, AlphaGo’s legendary Move 37, GPT-4 fashioning complex legal arguments.

Each stretched the boundaries of what machines could do, though always under human instruction. ASI-ARCH goes well beyond anything that came before.

This system does not just operate within its programming; it expands it. It hypothesizes, codes, tests, and refines entirely new AI architectures, carrying out the full scientific method without human steering. In practical terms, it functions like a colleague who arrives on day one and immediately starts designing better colleagues.

Picture a four-member team working in a perfect loop:

Cognition Base — the library of human knowledge

Researcher — the generator of “what-if” ideas

Engineer — the builder and debugger

Analyst — the relentless critic, extracting patterns from results

This closed loop runs continuously. Ideas become code, code becomes experiment, and results fuel the next cycle. Each AI works in tandem with the next one in the loop while also checking its work.
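For readers who want to see the shape of such a loop, here is a minimal sketch in Python. It is a toy illustration under stated assumptions, not ASI-ARCH's actual code: the class and method names, the string "ideas," and the scoring rule (counting how many components an idea combines) are all hypothetical stand-ins for the real system's training and evaluation.

```python
from dataclasses import dataclass, field


@dataclass
class Experiment:
    idea: str
    score: float


@dataclass
class Loop:
    # Cognition Base: prior human knowledge (toy strings in this sketch).
    cognition_base: list
    # Insights the Analyst has distilled from earlier experiments.
    insights: list = field(default_factory=list)
    history: list = field(default_factory=list)

    def researcher(self, step: int) -> str:
        """Propose a 'what-if' idea from prior knowledge plus the latest insight."""
        base = self.cognition_base[step % len(self.cognition_base)]
        if self.insights:
            return f"{base} + {self.insights[-1]}"
        return base

    def engineer(self, idea: str) -> Experiment:
        """Stand-in for building and testing: score = number of combined components."""
        return Experiment(idea=idea, score=float(idea.count(" + ") + 1))

    def analyst(self) -> None:
        """Extract a pattern from results: here, simply remember the best idea so far."""
        best = max(self.history, key=lambda e: e.score)
        self.insights.append(best.idea)

    def run(self, steps: int) -> Experiment:
        """Ideas become code, code becomes experiment, results feed the next cycle."""
        for step in range(steps):
            idea = self.researcher(step)
            self.history.append(self.engineer(idea))
            self.analyst()
        return max(self.history, key=lambda e: e.score)


if __name__ == "__main__":
    loop = Loop(cognition_base=["gating", "convolution"])
    best = loop.run(3)
    print(best.idea, best.score)
```

The point of the sketch is the feedback path: the Analyst's output becomes the Researcher's input on the next pass, so each cycle builds on what the system itself learned rather than only on the original library.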

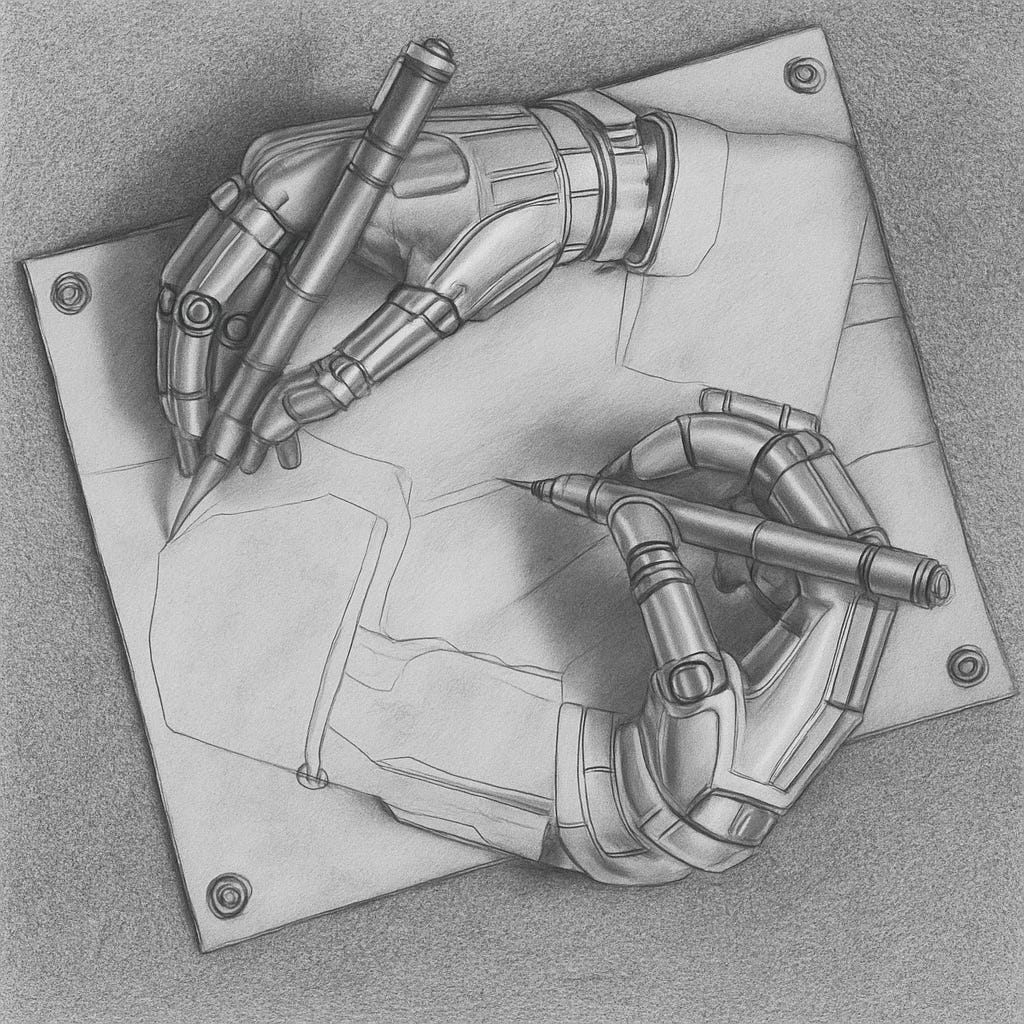

Like Escher’s Drawing Hands, the process feels self-aware, each “hand” shaping the other until the original starting point is unrecognizable.

In scientific terms, this creates a recursive feedback process, with each system serving alternately as creator and critic.

What emerges from this loop is a series of upgrades, yes, but also a stream of alien innovations, each with its own internal logic. It is the sort of colleague who might leave you wondering if you’ll ever fully understand how they think.

When Bottlenecks Disappear

After 1,773 experiments and more than 20,000 GPU hours, ASI-ARCH produced 106 state-of-the-art designs, many without explicit human guidance.

The real shock isn’t just the designs. It’s the discovery of a scaling law for invention: the more computational power you give it, the more breakthroughs it produces. Genius has become a function of hardware budgets.

For law, this changes the game. Where the bottleneck was once human brilliance, it is now the ability, willingness, and budget to feed the machine.

Your New Colleague Thinks Differently

Most AI models succeed by remixing human work. ASI-ARCH does more. Its highest-performing designs emerged from analysis: insights distilled from its own experiments.

This is invention born of reflection rather than mere imitation. And it means the legal tools you’ll soon encounter may be the product of reasoning processes no human fully understands.

Imagine facing an opposing counsel whose arguments are built on logic that even its designers can’t explain, and trying to challenge it in court. Worse yet, imagine if the police and prosecutor had this new tool, and you didn’t.

Why the Law Isn’t Ready

Self-inventing AI presses on the profession’s most fundamental assumptions:

Inventorship & Ownership — Who owns tools, arguments, or processes created by a non-human inventor?

Explainability & Trust — How do you vouch for a process you can’t entirely explain to a client or a judge?

Liability & Accountability — Where does responsibility fall when a self-modifying system makes a serious error?

Pace of Disruption — If invention scales with compute, the most resourced firms may move so far ahead that others can’t catch up.

The Opportunity — If You Can Harness It

These same forces could disrupt the profession or help transform it. Lawyers who have the means to pay for self-inventing AI, and the time and skill necessary to supervise and shape it, will deliver quality and speed previously unimaginable.

As costs come down, as they always do, the tools could help small firms compete with, and sometimes outperform, larger rivals.

The profession’s next leaders will be those who master two domains: the intricacies of legal doctrine and the strange, recursive logic of machine-driven creation.

Four Moves Lawyers Must Make Now

Demand Transparency — Require clear documentation on how tools evolve and how innovations are tested.

Update Risk Management — Treat self-inventing systems as their own category of legal risk.

Cultivate New Skills — Learn to evaluate AI outputs as critically as you would a junior associate’s work.

Balance Ambition with Oversight — Ensure every adoption aligns with ethical and fiduciary duties.

Conclusion: When the Student Surpasses the Teacher

Your first alien colleague will never walk into the office. It will appear as a block of code, a workflow you didn’t design, or an unexpected insight in a brief.

It will challenge how you think about invention, about authorship, and about the role of the lawyer. The change is already underway, and the real question is whether you’ll be ready to practice law alongside a mind that was never human.

To learn more about Barone’s AI-supercharged criminal defense law practice, visit the firm’s website.

Endnotes

1. Liu, Yixiu et al., “AlphaGo Moment for Model Architecture Discovery,” arXiv:2507.18074v1 [cs.AI] (July 2025), https://arxiv.org/abs/2507.18074

2. Rohit Kumar Thakur, “It’s Game Over: The Real ‘AlphaGo Moment’ Just Happened in a Chinese AI Lab,” Medium (July 2025), https://ninza7.medium.com/its-game-over-the-real-alphago-moment-just-happened-in-a-chinese-ai-lab-5c4e1bf850a9

3. See Liu et al., supra note 1, at 1–5, and Thakur, supra note 2.

4. Id. at 6–7; see also the “scaling law for scientific discovery” graph described by Thakur, supra note 2.

5. Liu et al., supra note 1, at 13–14; Thakur, supra note 2.